Crisis Developer: how to stabilize fast and avoid repeats

Production incidents hurt revenue and trust. A crisis developer is a short-term, hands-on intervention: contain the impact, find and fix the root cause, harden the system, and hand over a clear plan so it doesn’t happen again. Examples may reference PHP/Laravel, but the approach is stack-agnostic.

What this role is — and why it exists

A crisis developer joins when the usual delivery cadence no longer helps: incidents reach P0/P11, conversion falls, queues stall, or integrations destabilize core flows. The goal isn’t heroism or rewriting everything, but managed recovery: stabilize, find the root cause, close systemic gaps, and hand knowledge back to the team.

How we work: three tightly scoped stages

1) Stabilize — containment & service restore

- Rollback or feature-flag risky parts; temporarily disable non-critical features that amplify the blast radius.

- Turn on telemetry; capture artifacts (logs, traces, dumps, recent DB migrations).

- Objective: business-critical paths (sign-in, search, cart, checkout) work predictably again.

2) Fix — remove the root cause, not just symptoms

- RCA: race conditions in queues, missing idempotency in payment callbacks, unindexed DB queries, misconfigured timeouts.

- Remedy: code & config changes plus policies (retries, timeouts, limits, locks, idempotency).

- Regression tests safeguard against the bug returning through a side door.

3) Prevent — harden and monitor

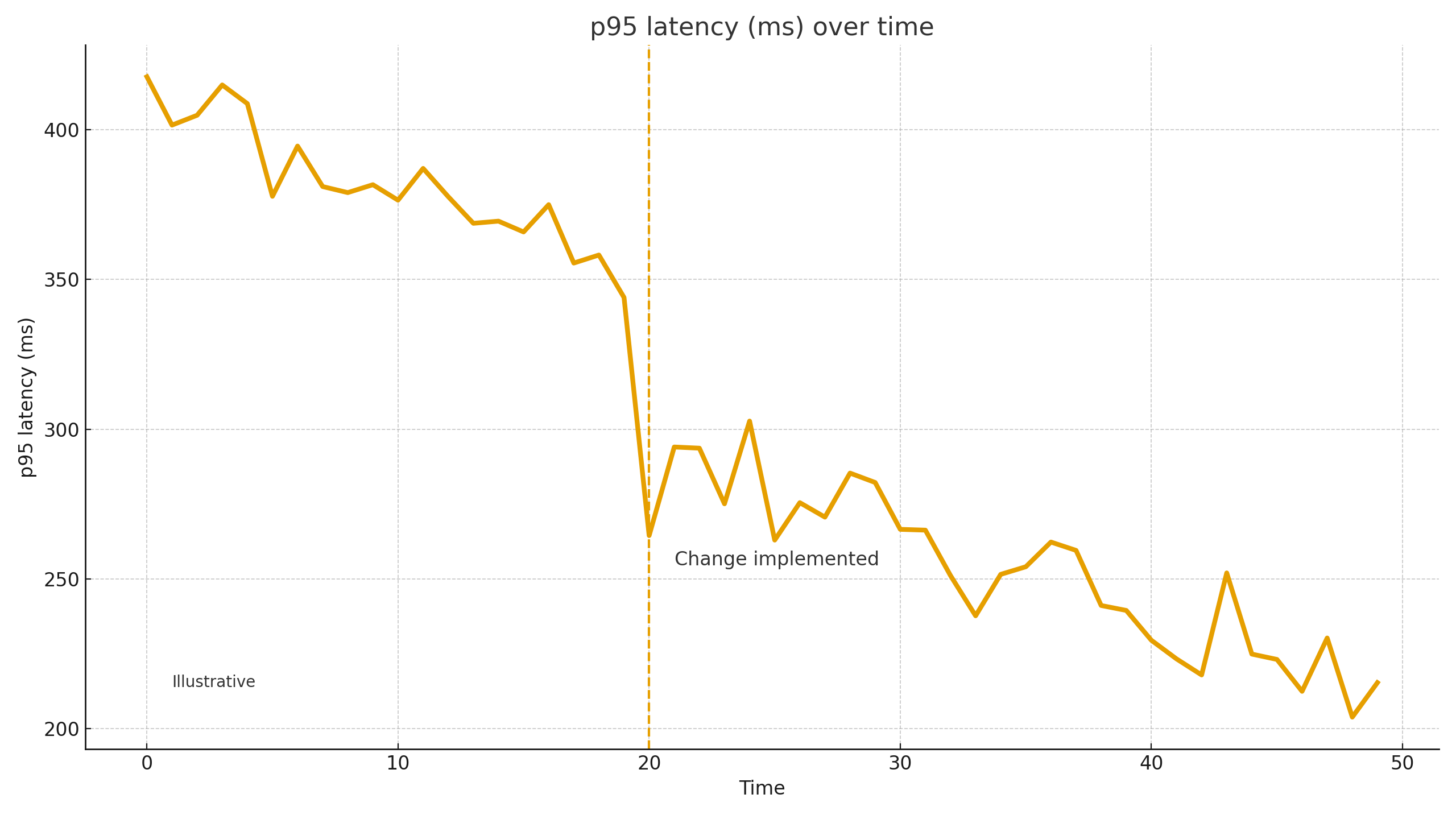

- Business-level alerts (payment error rate, surge in 5xx, p95 degradation) and clear ownership.

- Runbooks for common incidents; simple, actionable checklists.

- Define SLOs in business terms: what “working” means and how it’s measured.

About the numbers — careful and honest

Numbers in crisis work are illustrative, not promises. On similar projects, after removing a bottleneck we’ve seen p95 of a key API drop by ~30–60%, 5xx and payment errors down ~40–80% thanks to idempotency and retry/timeout policies, and checkout conversion rebound by +5–20%. MTTR often falls by multiples once alerts and a simple runbook exist. Final outcomes depend on architecture, code quality, traffic profile, and process maturity.

1) P0 / P1. In common incident priority schemes, P0 is a total blocker: critical unavailability of the product or a key business flow (e.g., payments widely failing). P1 is very high priority: a major functional degradation impacting many users or money, but not a total outage.

2) p95 (95th percentile of response time). A performance metric: the value under which 95% of requests complete. If checkout p95 = 2.4s, then 95% of users complete that step faster than 2.4s, while the slowest 5% take longer. Managing p95 targets the “painful tail” that hurts UX and conversion.

Get in touch

Get a

Crisis Developer

We jump into outages, failed releases, and P0 (production-blocking) bugs—triage, safe hotfix or rollback, and a clear next step.